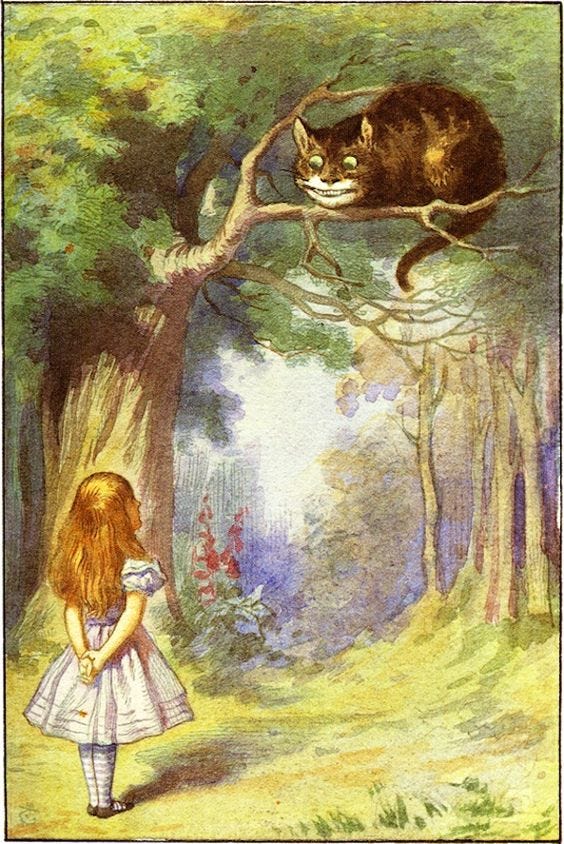

“Alice: Would you tell me, please, which way I ought to go from here?

Cheshire Cat: That depends a good deal on where you want to get to.

Alice: I don’t much care where.

Cheshire Cat: Then it doesn’t much matter which way you go.

Alice: …So long as I get somewhere.

Cheshire Cat: Oh, you’re sure to do that, if only you walk long enough.”

Like Alice, most organizations, and most people, have goals that haven’t been articulated clearly enough. I call these rough ideas “underspecified goals”: we only sort of know what we want. That’s normal for any complex process; when I write, my ideas coalesce only once they become more concrete. Novelists sometimes say that the story got away from them, when the characters’ behaviors don’t lead to the outcome the author initially imagined. This can lead to slight narrative deviations, or a full-out revolt of the characters.

This happens outside of writing as well, and specifically in organizations. But it isn’t always a handicap. To know when this underspecification is benign, or even useful, and when it’s harmful, we need to explain why and how it happens. That understanding, in turn, will lead us to some conclusions about how, in the harmful case, we can mitigate the problem or fix it completely.

Complex systems are like the weather; everyone complains, but nobody does anything. This is certainly due, at least in part, to the incentive to simply stay indoors when forecast metrics suggest it will be uncomfortable outdoors.

The bad news is, if you’re trying to run a human complex adaptive system like a company, “staying indoors” is not an option. The good news is, your goals can be more ambitious than that, even if you’re not sure what exactly they are. The lack of goals isn’t immediately fatal; it’s rarely even a noticeable handicap for organizations, as long as they don’t mind wandering.

As I noted in my post on metrics, “Building systems using bad metrics doesn’t stop their self-optimization, they just optimize towards something you didn’t want.” Underspecified goals interact with this process in an interesting way.

Strong-Willed Stories, Startups, and Metrics

Like stories, systems that self-optimize towards “something” are going to progress past obstacles towards their goals, however underspecified those goals are. Also like stories, unless carefully guided, the optimization can head in unexpected directions, due to internal tensions and the actions of strong-willed characters. That’s because organizations have a harder job than just setting goals or building metrics; they need to align metrics with goals and organize themselves. And if the goals are underspecified, that’s much harder. Unless the structure is already controlled, any coalescing of goals into measured, well-regulated systems will be warped by their own metrics.

This is a central challenge in the transition from startup to corporation, and it is much like the challenge of expanding a short story into a novel. A startup that has product-market fit is like a short story, and as Joseph Kelly suggested in his post Startups, Secrets, and Abductive Reasoning, the plot needs to coalesce around something more specific than “figure out how to make money, and then do that thing.” In expanding the plot to novel scale, it’s easy to add frills that detract from the story, or to let it wander away from the intended plot.

The misalignment between the self-optimization of a system and the underspecified goals is exactly what happens when metrics distort behavior. That’s because, while metrics are subordinate to goals, they work in tandem. The behaviors of bureaucracies emerge because of this mismatch, and it can’t be overridden — even when it’s clear to everyone involved that the behavior is maladaptive, or even insane.

If organizations don’t properly specify goals, their metrics can’t compensate. If their metrics don’t support their goals, goals will get lost in the organizational shuffle. Let’s make this a little less abstract, using Twitter usage as an example.

Twitter Usage and Metrics

I use Twitter fairly heavily. I find it to be a useful tool and a fun academic diversion, but my behaviors on Twitter tend not to be particularly goal-oriented. And since I haven’t specified my goals, I find my actions inevitably pulled towards measurable achievements: number of followers, number of retweets, “engagements,” and views. Mostly, these metrics align with what I’d like, which is to say things others find interesting and to find people I enjoy interacting with.

That’s not particularly a problem, but at some point I noticed that it distorts my use of Twitter in interesting ways. For example, I posted a couple of links to some of my work, and realized that my engagement was much higher when I included a screen capture of an interesting quote. This seemed like a great idea, until I dove a bit deeper into the analytics and realized what was happening: people would click on the image to read it, but not visit the link. The image was increasing “engagement” at the expense of people actually reading the thing I was linking to, which made it counterproductive. Similarly, I’ve noticed that snarky or clever comments can get retweets and likes, but create very little engagement with the people I’m interested in talking to, and for those people they probably decrease the signal-to-noise ratio of my tweeting.

This exactly mirrors the problem with underspecified goals in organizations — it creates a bias towards reliance on whatever is easy to measure, even when we’re only talking about a single person. This only gets worse when it’s not just the different voices in your head that are warping behavior.

“Easy to measure” is a mediocre qualification for what to aim for when making decisions. So why is it one of the most common ways to construct metrics? Perhaps because it is easy. But the ease with which we can specify goals that strongly corral the system makes them overpowered. When you make only one part of a system stronger, it breaks the rest, unless the other parts are strengthened to compensate. In writing fiction, you need to balance the strengths of the protagonist with corresponding strengths of their opponent; as Eliezer Yudkowsky put it, “You can’t make Frodo a Jedi unless you give Sauron the Death Star.” In an organizational context, these overpowered, easy-to-use metrics overwhelm typically underspecified goals. Fixing this mismatch requires a powerful countervailing force to compensate, in the form of a mission.

Organizations and Goals

There is usually one person nominally in charge of a company, and they usually have both ideas about what the company should be doing and the authority to try to enforce those ideas. Unfortunately, central authority simply doesn’t scale when imposed from above, without structures to support and align the goals. The various failures of command-and-control communism illustrate this: you can’t manage complexity by imposing rules from a central authority. Startups typically figure this out the hard way, since the loose structures of a small group are incapable of scaling, but the failures don’t really show up until you get a larger system. That’s when you see herds of people rampaging through different levels of the system, all with different needs, pushing in different directions.

The pressures that distort even simple goals in complex settings build from unanticipated needs, unguided by unclear, underspecified goals. This is a result of something explained more clearly and concretely, if less succinctly, in the late James Q. Wilson’s book Bureaucracy. For now, I’ll just quote his description: bureaucratic organizations are “a natural product of social needs and pressures — a responsive, adaptive organism.” I’ll return to his explanation of why this is true, but clearly companies adapt and respond to their complex environments.

These environments exist at multiple levels, both internally and externally. The motives of an organization, to the extent they are coherent at all, are the result of the goal-orientation of all of these smaller parts. These small-scale goals combine in complex ways and can work at cross purposes, and it’s easy for them to lose the plot.

This does not mean you devolve into chaos. The study of most complex systems shows that disparate goals can lead to harmony despite conflict; alignment in the absence of clearly imposed goals, organizational or systemic, is possible. For example, capitalism, at its best, uses competition to harness the desires of individuals to achieve a synthesis that is better for everyone. As Adam Smith put it, “It is not from the benevolence of the butcher, the brewer, or the baker that we expect our dinner, but from their regard to their own interest.” A contrasting example is natural ecosystems, which maintain a complex balance among species and achieve remarkable stability, but not for mutual benefit.

But these outcomes are adaptive, not goal-directed. How do you add goals?

In order to manage a complex system like an ecosystem, an economy, an environment, or a business, you need to understand it well enough to know what to change, and what to leave alone. As a rule, if you cannot model a system with enough fidelity to predict it, you cannot control it. The implication in my last post was that only perfect models are able to capture a system well enough — and that’s partly true. But when planning and building a system, the Nirvana fallacy is unhelpful; perfect models don’t exist. In human systems, as opposed to physics or engineering, the goal is managing complexity without perfect models.

Going back to the previous post yet again, coordination of complex systems is hard, and requires models to understand the system and techniques to monitor and motivate, but harnessing diversity in service of a clear goal requires first having a clear goal. These come in two well-known varieties: BHAGs (big, hairy, audacious goals) and SMART goals.

Goals – SMART or Big, Hairy, and Audacious?

“A true BHAG is clear and compelling, serves as unifying focal point of effort, and acts as a clear catalyst for team spirit. It has a clear finish line, so the organization can know when it has achieved the goal; people like to shoot for finish lines.”

— Collins and Porras, Built to Last: Successful Habits of Visionary Companies

The advice given to employees setting goals is rarely for them to be audacious. In fact, in most companies, individuals are told their goals should be SMART — Specific, Measurable, Achievable, Realistic, and Time-bound. This points to a critical conflict — and the chasm that exists between individual goals and big, hairy corporate goals is where it unfolds.

This was part of Peter Drucker’s insight in developing management by objectives, the approach from which SMART goals later emerged. Big goals appear to be accomplished by a huge, centrally coordinated effort, but they aren’t; they are segmented into smaller, clearer short-term goals. When the big, hairy, audacious goal can be split into slightly smaller and less hairy subgoals that are individually more tractable, corporate structure becomes useful. The individual groups within the organization can and should have some specific subgoals, aligned with the management structure. SMART goals can ideally bridge this gap, because managing and setting metrics centrally is very complex. Again, as I explained, metrics work because they help align the intuition of the workers with the needs of the company, create trust between workers and their management, and reduce the complexity of larger goals into manageable steps.

This is what unites the question of how companies scale with the question of how metrics work, but it leaves open the question at hand: how to align them. Each SMART goal can be atomic and simple, but how does that help keep aligned the parts of an organization that might conflict? This is the job of management: weaving disparate parts into a coherent whole using well-specified overarching goals. The problem is that many organizations don’t do this; many fail to set real goals entirely, and instead play Calvinball.

Chess, Life, and Calvinball

My favorite quote about decision making comes from Timo Koski and John Noble’s book on Bayesian networks: “Decision theory is trivial, apart from computational details (just like playing chess!)” The claim is that making decisions is “simply” about picking the options that lead to your goals. In chess, checkmating your opponent is the obvious goal, but it is far from clear how to do so. Finding out which sequence of moves will lead to checkmate requires investigating a combinatorial explosion of possibilities. After only two moves by each player there are already 197,281 possible move sequences, few enough that checking them all is still feasible, but only eight of them end in checkmate, so almost none achieve the goal.

And life isn’t chess. As Moravec’s Paradox would have it, goal setting is so easy, we have no idea how to do it. That means that people do it unconsciously, and formalizing it into an organizational system is hard. In fact, most organizations, especially ineffective ones, are run like a game of Calvinball: “you make up the rules as you go.” The rules change arbitrarily based on the whims of those in control, and not everyone needs to agree to them.

The problem here is that decision theory starts from a point fairly far along in the decision process. For it to be useful, you need to have a clear idea of what the rules are, and what your goals are, before you can start. Without that clear idea, even if we can list all the choices, even the trivial part of picking the best option is a mess.

Life is a human-complete problem, where specifying the goals and rules fully is part of the game, and the goals are constantly evolving. But organizations don’t need to be quite that sophisticated. They don’t need to find the meaning of life, and can manage with slightly more modest, if still hairy, goals. But when corporations have underspecified goals, they drift, and when they have no goals, they are deeply lost. A system that is drifting or lost forces the people in it to invent their own justifications and find their own way.

Of course, there are exceptions. Paul Graham has a simple goal for Y Combinator that squares the circle nicely: start companies that wouldn’t have gotten off the ground otherwise. They did it because it seemed like a cool hack. And in fact, most companies start this way: there is a cool idea, and the founders want to push it forward. But Graham’s success doesn’t offer a useful paradigm for the general case, because Y Combinator doesn’t need to scale; it can incubate others to do so instead.

For most companies, making the cool idea work, and becoming profitable, is where scaling comes in. As they become corporate, metrics take over, pushing in new directions, dictated by internal logic, not your goals. And that’s when clarity is critical.

Visions of Scaling Under Pressure

In navigating the decision to go corporate or go home, one of the costs of going corporate is that a startup growing beyond infancy needs resources, and getting them requires alignment with external systems. Startups need to satisfy the needs of their funders, of the market, and of their employees. Some organizations are created ab initio in this condition, like government agencies, which are Wilson’s focus, or joint ventures between established firms. They have different types of problems, but I’ll start by focusing on a problem unique to startups: the loss of their vision.

Even early in the life of a startup, most potential employees are looking for either a big payout or a stable job. They aren’t pushing your vision, but if your vision aligns with building a successful company, as it needs to, that doesn’t matter. But, as I argued before, as you scale, you need to build a less flexible structure. That structure requires delegation, and loss of direct control. This means that using metrics to replace trust will lead to a disconnect between the goals and the metrics used.

Similarly, outside capital comes with the goal of maximizing returns, not fulfilling the vision. Dan Schmidt wrote about this in the context of the show Silicon Valley: the vision of a cloud-based machine learning tool that uses their amazing compression algorithm turns into “the box,” because pressure from the outside leads to compromise of the vision. The BHAG was lost under internal pressures that result from underspecified (or under-communicated) goals.

This is true because, as noted before, the actual behavior of an organization is dictated by all of its layers. Unless the incentives exactly align with the goals, the metrics will twist the system away from the goals, but how this pressure distorts the organization is contingent on many other factors. This is where Goodhart’s law fails to be specific enough.

Goodhart, Conant, Ashby and the Limits of Laws

In the last post, I cheated by paraphrasing Goodhart’s law as saying “When a measure becomes a metric, it ceases to be a good measure.” In fact, his paper states something more precise: “any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes.”

Credit for Goodhart’s law in the form we usually cite it (it’s a long story) is probably more due to Donald T. Campbell, who said: “The more any quantitative social indicator is used for social decision-making, the more subject it will be to corruption pressures, and the more apt it will be to distort and corrupt the social processes it is intended to monitor.”

The original claim by Goodhart, “any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes,” is false, at least sometimes. And Campbell’s Law doesn’t fare much better — there are clear reasons for the collapse of the regularity, and/or the corruption pressures.

Without getting into the math, let me sketch out why, when a regulator attempts to control a system, the agents begin breaking the statistical regularity. And in order to understand why it breaks, it’s useful to first understand when and how it emerges in the first place. Once we understand that, we can lay out what it means for companies trying to achieve goals.

To understand when and how regularities emerge, consider a thought experiment. Negate the problems I laid out in the earlier post about why metrics fail: eliminate the need for trust, don’t simplify complexity, and represent everything correctly, and you’ll notice that metrics now work. In fact, that’s exactly what Roger Conant and Ross Ashby proved with the “Good Regulator Theorem,” which takes its name from the title of their paper, “Every good regulator of a system must be a model of that system.” With remarkably few assumptions, Conant and Ashby prove that in order to regulate any system optimally, you need to know what will happen in every state.1 That means a regulator needs to be able to predict the system perfectly, thereby creating a model “isomorphic with the system being regulated.”

This theorem is closely related to a fundamental result in control theory, the internal model principle. For systems simple enough to model with high fidelity, it provides useful guidance for engineers, but what about complex systems?
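The intuition behind the theorem can be shown with a toy example. This is only a sketch under stated assumptions. The four-state system, the modular outcome rule `z = (s + r) % 4`, and the names `outcome` and `success_rate` are all hypothetical, and brute-force enumeration of a tiny policy space stands in for Conant and Ashby's proof. The only policy that regulates perfectly maps each state to its exact counter-action, which makes it a model of the system. A regulator that ignores the state does no better than chance.

```python
from itertools import product

# Hypothetical toy system (an illustrative assumption, not from Conant and
# Ashby): a disturbance s and a regulator action r each take values 0..3,
# and the outcome is z = (s + r) % 4. The regulator's goal is z == 0.
STATES = range(4)
ACTIONS = range(4)

def outcome(s: int, r: int) -> int:
    return (s + r) % 4

def success_rate(policy: dict[int, int]) -> float:
    """Fraction of equally likely disturbances the policy drives to z == 0."""
    hits = sum(outcome(s, policy[s]) == 0 for s in STATES)
    return hits / len(STATES)

# Enumerate every deterministic policy, i.e. every mapping from state to action.
policies = [dict(zip(STATES, acts)) for acts in product(ACTIONS, repeat=len(STATES))]
best = max(policies, key=success_rate)

# A "blind" regulator that ignores the state can only commit to one fixed action.
blind_best = max(success_rate({s: a for s in STATES}) for a in ACTIONS)

print(best, success_rate(best))  # {0: 0, 1: 3, 2: 2, 3: 1} 1.0
print(blind_best)                # 0.25
```

The winning policy is simply the inverse of the system's dynamics. Any regulator that succeeds every time has to encode that mapping somewhere.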

In complex systems, somebody is in control of some part of the system, and wants to use that control to guide overall outcomes. They need to control the system, but can’t do it exactly — they never have a Conant and Ashby “good regulator” of a complex system, since models are approximations. But the part that could be modeled in an idealized world still exists, and is the source of the regularities that Goodhart and Campbell’s laws talk about, which can be noticed and exploited by people in control of other parts of the system – the agents.

This means any simplified model used by a regulator can be exploited, especially when the agents understand the model and metrics used. This happens almost everywhere: employees understand the compensation system and seek to maximize their bonuses and promotions, drug manufacturers know the FDA requirements and seek to minimize the cost of getting their drugs approved, and companies know the EPA regulations and seek to minimize the probability and cost of fines. The tension created by the agents is what leads to Goodhart’s law; whatever simplifications exist in the model can be exploited by agents.
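Here is a minimal sketch of how an agent exploits a simplified model. Everything in it is a hypothetical assumption chosen for illustration: the 10-hour budget, the two tasks, the square-root returns, and the names `true_value` and `metric`. The regulator's metric counts only the measured task. An agent who maximizes that metric puts all effort there, and the organization ends up with less of what it actually wanted than a balanced split would deliver.

```python
import math

# Hypothetical setup (an illustrative assumption): an employee splits a fixed
# budget of 10 hours between a measured task A and an unmeasured task B.
BUDGET = 10

def true_value(a: float, b: float) -> float:
    # What the organization actually cares about: diminishing returns on each task.
    return math.sqrt(a) + math.sqrt(b)

def metric(a: float, b: float) -> float:
    # The regulator's simplified model only counts output on task A.
    return a

allocations = [(a, BUDGET - a) for a in range(BUDGET + 1)]
gamed = max(allocations, key=lambda ab: metric(*ab))
ideal = max(allocations, key=lambda ab: true_value(*ab))

print(gamed, round(true_value(*gamed), 2))  # (10, 0) 3.16
print(ideal, round(true_value(*ideal), 2))  # (5, 5) 4.47
```

The agent is not being malicious here. The unmeasured task is exactly the part of the system the model simplified away.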

This brings us to principal agent problems.

When Agents Display Agency

Companies want to align employees around goals, so that the agents are pushing for the same thing the company wants. This is similar to the relationship between authors and characters: authors are the principals, characters are the agents.

In a story, characters are vehicles for the author’s vision, at least in theory. In practice, their given personalities and back stories dictate what can and cannot happen in the story. If the story is realistic, the needs of the narrative take a back seat to reality imposed by the characters. That’s why it’s not completely crazy when an author says something like this:

https://twitter.com/cstross/status/727418292974043136

The last post talked about principal-agent problems a bit. In simple situations, there is a clear solution to be found, using just a bit of calculus. In more complex ones, you may be able to do the same, as long as you can model the needs and goals of all the participants. But as I said, metrics simplify complexity, leading to failure. The complexity, in this case, is that every human is the protagonist of their own story — and if that story doesn’t fit into the bigger story, that’s too bad for the bigger story.
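To make "a bit of calculus" concrete, here is a minimal sketch of the simplest case. The assumptions are mine, not the post's: a linear bonus `b` per unit of effort `e`, a quadratic effort cost `e**2 / 2`, and no fixed salary or participation constraint. The agent's first-order condition gives `e = b`. The principal then maximizes `b - b**2`, so `b = 1/2`. The code checks this by brute-force search rather than calculus. The principal reaches the optimum only because it models the agent's goals exactly.

```python
# Hypothetical textbook-style setup (an assumption, not the post's model):
# the agent earns a bonus b per unit of effort e and bears cost e**2 / 2;
# the principal keeps output e minus the bonus paid, b * e.
GRID = [i / 100 for i in range(201)]  # candidate values 0.00 .. 2.00

def agent_best_effort(b: float) -> float:
    # The agent maximizes its own payoff, not the principal's.
    return max(GRID, key=lambda e: b * e - e * e / 2)

def principal_profit(b: float) -> float:
    # The principal anticipates the agent's best response to each bonus rate.
    e = agent_best_effort(b)
    return e - b * e

best_bonus = max(GRID, key=principal_profit)
print(best_bonus, agent_best_effort(best_bonus))  # 0.5 0.5
```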

In companies, the agents don’t maximize the discrepancy between the metrics used and the goal: they aren’t necessarily against the larger goal, they just pursue their own goals, subject to the regulator’s rules. In Goodhart’s terms, the correlation doesn’t reverse; it simply collapses.

A metric may be an unbiased estimator of the goal over the entire space of possibilities, but there is no guarantee that it is unbiased over the subspace induced by agents’ maximization behaviors. And guaranteeing unbiasedness over a subspace induced by agents maximizing one function doesn’t do anything for guaranteeing unbiasedness for other functions.
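A small simulation shows this effect, which is sometimes called the optimizer's curse. All parameters are assumptions chosen for illustration: standard normal true values, standard normal measurement noise, 50 options per round, and a fixed seed. Across all options the metric's error averages out to roughly zero. Among the options an agent actually picks by maximizing the metric, the metric systematically overstates the true value.

```python
import random
import statistics

# Illustrative simulation (all parameters are assumptions): each option has a
# true value, and the metric equals that value plus zero-mean noise, so the
# metric is unbiased across the whole population of options.
rng = random.Random(0)
TRIALS, OPTIONS = 2000, 50

all_errors, chosen_errors = [], []
for _ in range(TRIALS):
    values = [rng.gauss(0, 1) for _ in range(OPTIONS)]
    metrics = [v + rng.gauss(0, 1) for v in values]
    all_errors.extend(m - v for m, v in zip(metrics, values))
    # An agent picks whichever option scores best on the metric.
    best = max(range(OPTIONS), key=metrics.__getitem__)
    chosen_errors.append(metrics[best] - values[best])

print(round(statistics.mean(all_errors), 2))     # close to 0: unbiased overall
print(round(statistics.mean(chosen_errors), 2))  # clearly positive: biased after selection
```

Nothing about the metric changed between the two lines. Only the subspace it is evaluated on did, and that subspace is the one induced by maximization.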

In everyday terms, if your agents don’t want what you thought they did, your metrics won’t help. In other words, even metrics that align well with agents whose goals are understood are distorted by agents whose motives or goals differ from the ones used to build the metric. And because all metrics are simplifications, and all people have their own goals, this is inevitable.

Wilson calls these people operators, and has some useful suggestions, but before getting to his points, I need to circle back to clarify why startups don’t have these problems until they start to expand, and neither do other small teams working together to achieve a common goal. As a company expands, again, we start to need legibility in order to manage it.

The Soft Bias of Underspecified Goals

If you don’t have a clear goal, the target you’re aiming for is implicitly underspecified, and could lead to many outcomes. That means your metrics don’t particularly align agents, and you’re very susceptible to Goodhart’s law pulling you towards a system that aligns more with the agents’ interests than with the interests of the regulator. This isn’t quite the same as regulatory capture, but it shares features. The key point I want to make is that without specific, well-specified goals, metrics take over.

Like people, organizations drift when they don’t have clear goals, and like people, they implicitly look for metrics to justify their decisions. And metrics distort behavior in both directions: as I noted about my own Twitter use, bad metrics distort actions, but good metrics can do the opposite.

Some parts of organizations do this really well; salespeople have a really clear measure available, which is their sales. With fairly minimal tweaking, this measure can be adapted to account for profitability, aligning the metric with the actual goal. Extending this just a bit further, commissions use the profitability metric to incentivize behavior.
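A toy pricing example shows why switching the commission base from sales to profit matters. The numbers are hypothetical and chosen only for illustration: linear demand `q = 100 - p` and a unit cost of 40. A commission is a fixed positive share of its base, so a salesperson paid on revenue chooses the price that maximizes revenue. A salesperson paid on profit chooses the price that maximizes profit. The commission rate itself cancels out of both choices.

```python
# Hypothetical numbers for illustration only: linear demand q = 100 - p and a
# unit cost of 40. A salesperson paid a fixed share of some base picks the
# price that maximizes that base.
UNIT_COST = 40

def quantity(price: int) -> int:
    return max(0, 100 - price)

def revenue(price: int) -> int:
    return price * quantity(price)

def profit(price: int) -> int:
    return (price - UNIT_COST) * quantity(price)

prices = range(0, 101)
print(max(prices, key=revenue))  # 50: commission on revenue favors discounting
print(max(prices, key=profit))   # 70: commission on profit matches the company's goal
```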

This isn’t an unalloyed win, though — because organizations are not only about sales, and companies are interwoven systems. Moving one part of the system in isolation warps the rest of the system — hence the constant tension between sales and engineering. This gives us the trope of sales promising desired features at low prices, so that engineers are forced into providing technically inferior products. For evidence of this, see every Dilbert with sales I could find. (Well, except this one and this one, which make some of my earlier points.)

Decoupling different parts of a corporation is a solution suggested to me by Deepak Franklin that addresses the flexibility/legibility tradeoff I discussed in my earlier post, and in theory addresses the tension of how to align people with goals as well, by simplifying the system. That’s a large part of what led me down the path to metrics, and now it should be clear why I think it’s only a partial solution.

In the novel management model known as holacracy, someone must define the roles of the components and their interfaces, then choose metrics and set incentives for each to enable them to cooperate. Interactions between different parts of a system are exactly the challenge that necessitates a regulator, in our earlier terminology.

Segmenting an organization allows for the model to be simplified, and as long as the components actually can operate independently, with clear individual goals and relationships, this should work well. Each region’s sales team may be able to operate independently, with their own goals. In fact, this model does sometimes work — and the manufacturer / distributor / sales model in many parts of retail is a testament to that fact. The retail store doesn’t need to manufacture anything to sell well, and so the incentives can be nicely compartmentalized with contracts between separate companies. The distributors don’t need to care about either, and are paid to move objects safely and in a timely fashion from Point A to Point B.

Ronald Coase and Complexity

The reason this doesn’t always work well is Ronald Coase’s insight: it’s all because of transaction costs. He explained that companies exist, rather than outsourcing everything to independent contractors, largely because of management.

The functions of management, such as bargaining with employees, coordinating and incentivizing them, and enforcing rules by docking pay or firing people, are easier to execute informally, which reduces transaction costs. Going corporate is a solution because once an organization scales, these tasks are all done more efficiently using a structural model where someone is in charge, rather than a contractual model that formally defines relationships, roles, and rules in detail.

Coase’s model shows a trade-off between consolidation of everything under one roof, with corresponding higher costs for management but more efficient workflows, and allowing markets to provide competition. And the more complex the process is, the more efficient it can be made inside an integrated unit.

Holacracy splits this scheme, allowing for processes to be separated from the management structure, to reduce management costs. Coase’s model would argue that this introduces costs for explicit management of otherwise informal internal business relationships: the worst of both worlds.

The problem complexity introduces, as formulated earlier, is that the systemic incentives drift away from the goals if the model is not complete and explicit. Holacracy requires clear segmentation and clear goals. Because of this, holacracy cannot square the circle of needing either bureaucratic management or explicit models for contracts between parts of the business. Neither can any other faddish scheme that ignores the tradeoffs.

If the model is explicit, game-theoretic optima can be calculated, and principal-agent negotiations can guarantee cooperation. This is equivalent to saying that simple products and simple systems can be regulated with simple metrics and Conant and Ashby style regulators, since they represent the system fully. And in a sense, Goodhart’s law is just the principal-agent conflict in regulatory, as opposed to contractual, domains. Others have noted this relationship, and it makes sense that the two principles were identified around the same time.

Optimizer's curse + principal-agent problem = Goodhart's law.

— Model Of Theory (@ModelOfTheory) September 18, 2016

Holacracy is, in some sense, a return to smaller independent companies that have simpler tasks. And it can be effective in some cases because it forces the company to make sure their goals and metrics align. But you can’t disaggregate your goal if it’s big, hairy, and audacious.

And if your system is complex, good luck segmenting portions off to drift on their own; corporate inertia will kill you.

Back to Wilson’s Organizational Theory

Wilson’s ideas were a move away from high modernism, away from the economic model for understanding organizations from the top-down. His work had a focus on understanding the goals and actions of individual operators, which lead to the systemic biases of bureaucracies. Those biases had been observed for a long time, but focusing on the roles of the operators makes it possible to understand how and why a system doesn’t work the way the designers intended.

Organizations, like individuals, have personalities. Like personalities, they are a function of environment and temperament, and there are many ways to categorize them, but Wilson suggests a useful 2×2 that explains something I’ve kept coming back to. He poses two critical questions for managing an organization: “Can the activities of their operators be observed? Can the results of those activities be observed?” Along those lines, he gives examples of government bureaucracies that fall into each category; his examples are worth examining, but he has already done that well.

The point I think is critical, however, is that metrics are hard or impossible to get exactly right when the goal isn’t simple. That means that in the left half, an organization’s measurements are inexact and problematic. Similarly, the bottom half are organizations that have a hard time incentivizing the things that lead to the goal. Combining these challenges is particularly difficult — which is why it was so easy to pick on education in my last post.

Wilson has a partial solution, which he calls organizational mission, as distinct from a goal, and I think this is a key point. As he says, “When agency goals are vague, it will be hard to convey to operators a simple and vivid understanding of what they are supposed to do.” The two most significant challenges he notes are complexity and unclear missions. The first, I argued, is sometimes irreducible; if we can’t disaggregate the tasks, we can’t have a “simple and vivid understanding.” And “managing complex and expansive programs is much harder if they involve highly disparate tasks.”

The second, however, can be remedied. “The great advantage of mission is that… operators will act… in ways that the head would have acted had he or she been in their shoes.” But that requires alignment not of metrics and goals, but of goals and missions.

Self Justification

Lost organizations are full of people who need to justify themselves, and metrics are a really easy way to do so. Because they are lost, those metrics cannot align them with unclear goals. That means that unless there is something everyone is aiming for, the metrics push even people whose goals may align with the company away from that alignment. If the goals aren’t clear, they become secondary to the need for self-justification.

In Calvinball, if the game is “Q to 12”, you don’t know if you’re winning or losing. That’s the point: being lost doesn’t work if you’re trying to win. You might as well make up meaningless rules trying to gain a temporary advantage, which makes it a zero-sum game. And as Abram Demski pointed out to me, this is an even deeper point; Holmström’s theorem shows that when people are carving a fixed pie, it’s impossible to achieve a stable game-theoretic equilibrium and be efficient too, unless you ignore the budget constraints.
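A toy version of the budget-balanced team problem Holmström studied shows the tension. Everything here is an assumption chosen for illustration, not his general model: two workers, output equal to the sum of their efforts, a private cost of effort a²/2 for each, and output split equally so the payouts always sum to exactly what was produced.

```python
# Toy two-worker team illustrating the free-riding behind Holmström's result.
# Hypothetical assumptions: output = sum of efforts, each worker's cost of
# effort a is a**2 / 2, and output is split in equal shares that sum to 1.

N_WORKERS = 2


def equilibrium_effort(share: float) -> float:
    # Each worker maximizes share * output - a**2 / 2.
    # Setting the derivative share - a to zero gives a = share,
    # whatever the other worker does.
    return share


def efficient_effort() -> float:
    # A planner maximizes total output minus total cost,
    # sum(a_i) - sum(a_i**2) / 2, whose derivative 1 - a_i is zero at a_i = 1.
    return 1.0


def total_surplus(effort: float, n: int = N_WORKERS) -> float:
    # Team output minus the team's total cost of effort.
    output = n * effort
    cost = n * effort ** 2 / 2
    return output - cost


share = 1 / N_WORKERS  # budget balance: the shares sum to 1
a_eq = equilibrium_effort(share)
a_opt = efficient_effort()
print(a_eq, total_surplus(a_eq))    # 0.5 0.75
print(a_opt, total_surplus(a_opt))  # 1.0 1.0
```

Giving each worker a share of 1 would restore efficient effort in this toy, but the shares would then sum to 2, promising more than the team produces; that is the budget constraint you would have to ignore.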

The way to cut this Gordian knot is to not be lost, and to make sure your people aren’t playing a zero-sum game. And the easy way to do this is to make sure people can contribute to growing the size of the pie, making it a non-zero-sum game. Creating this non-zero-sum game to serve as a context for goals is the function of the mission; it’s something that everyone wins by furthering.

Meaningful contributions from operators, however, require instantiating the organizational mission as a goal, and dividing that goal meaningfully into pieces that individuals can contribute towards. And despite the fact that visions are sometimes clear, “goals are hopelessly vague, [and work that should contribute to their attainment is] sadly ineffectual.” The obstacles are clear, even when the path ahead is not. So perhaps the first step in moving towards a vision is to remove the obstacles, clarifying before asking for directions.

If you’re OK with being idly lost, the alternative to clarification and direction is that you’ll end up somewhere, just like Alice. But I think the better place to start comes with a different childhood icon.

“It’s best to know what you are looking for, before you look for it.”

― AA Milne’s Winnie the Pooh

Align Your Metrics!

Hopefully, given the new insight about why it matters, I can usefully repeat the cliche everyone who studies management theory likes to trot out: To motivate a team, you need goals that are clear, and metrics that support them.

That means that your strategy — how you break down the problems and how you plan to remove the obstacles — should be widely understood, and your goals should match a shared mission. And both need to match the systems you’re using to navigate, so that your people align themselves with the strategy.

That isn’t something that happens on its own, but it also isn’t something that can be imposed from above. Instead, it needs cooperative alignment, matching the goals with incentives based on useful metrics.

Failure to use metrics well means that motivations and behaviors can drift. On the other hand, metrics will never produce exact alignment, because complexity isn’t going away. A strong-enough sense of mission may even make it possible to align people without metrics.

It makes sense, however, to use both sets of tools. Adding goals that are understood by the workers, are aligned with the mission, and clearly allow everyone to benefit will help moderate the perverse effects of metrics, and the combination can align the organization to achieve those goals. That means ambitious things can be done despite the soft bias of underspecified goals and the hard bias of overpowered metrics.

[1] Daniel Scholten’s paper introduces this idea a bit more gently, and it is both very readable, and very worth reading.

Thanks to Joseph Kelly for helpful feedback on this post, and to Abram Demski for pointing me to Scholten’s paper a couple years ago, and starting me on this line of thought.

Minor correction: “uses competition to harnesses [should be harness] the desires”. Thanks for the very thought-provoking read!

I’ve never commented here before, but I just wanted to say, ribbonfarm is my favorite blog. Quite the collection of talent you guys have got here.

This is fantastic, so much food for thought! And if I could make a request, I would love to see further thoughts in a future post on your organization-as-a-novel concept. Extrapolating from a short story (startup) to a novel (becoming a larger or more complex company) to perhaps a recurring series (a company continuing to transform over decades, to take care of the longitudinal/generational complexity) could be interesting. As a novelist by night and a business strategist by day, I have always seen the two as parallel. Lately I’m serving as a general manager building a product line wrapped in a program. My main mental model is to think of this effort as a series of narrative arcs with a main line and subplots. This model may not be a one-to-one match (fewer hard-boiled detectives, roller-coaster crises and wizards in the business world!) but the basic storytelling organizing logic works for me.

At a macro/enterprise level, I think of the “way to play” or essential pattern a company takes in a market as genre. This then dictates some of the shape of the business model, which leads us to target customers (demographics/readers) and the value proposition, i.e. basic compositional elements like setting, protagonist, plot (I have in fact created a Storytelling Canvas based on the Business Model Canvas). Then comes the operating model showing how things are going to get done: structural details like character-driven or plot-driven, the mirror moment, three-act details, first/third person. Then come the scenes and chapters, where the trick is to split into two mindsets, editor and writer: the editor provides structural guidance in the form of plot guardrails while allowing local autonomy to the agents or characters. Every scene is a problem that needs solving by the writer, but within those guardrails of structure. And the whole thing holds together via structural ratios: balancing exposition with action, details introduced that show relevance later, multiple threads, and a sort of rule-of-thirds placement of critical moments (decisions, actions, crises).

Whatever the pattern, the heart of the story is the change or transformation of the protagonist, and that holds true for companies as well (again, it might just be in my head that this makes sense). Just as in traditional storytelling (not counting experimental fiction), those structural ratios can serve companies well over the long term, far better than isolated KPIs or metrics.

One example of a structural ratio from marketing: the CMO is pleased because his customer acquisition numbers are up. The EVP of Sales is pleased as well. But the CEO isn’t happy because she sees a trend – the costs of acquisition are going up while new customers aren’t buying as much as previously. It’s not an insurmountable problem but it’s one that only a structural ratio can illuminate. A simpler example is a patient with blood pressure and cholesterol numbers within accepted tolerances – but that patient can’t run up 10 steps without doubling over. The individual KPIs aren’t enough to tell the story of the system, which is where structural ratios can come in handy.

That CEO can see, using selected ratios, what she is investing in change (input, or efficiency), what is coming out of those investments (output, or productivity), and then their effectiveness, as output divided by input tracked over time. That can point to the deeper health of the system and show whether she is getting good returns on her investments in change. The trick is in defining the handful of important ratios that track proximate goals inside the longer narrative or mission, and what needs to change to make those goals happen.
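The marketing example can be sketched in a few lines. The quarterly figures below are invented purely for illustration, and the ratio names are hypothetical labels rather than standard definitions; the point is only that the headline KPI and the output-over-input ratio can move in opposite directions.

```python
# Hypothetical quarterly figures, invented for illustration only:
# (acquisition spend, new customers, revenue from those new customers)
QUARTERS = [
    (100_000, 500, 300_000),
    (150_000, 600, 300_000),
    (220_000, 700, 280_000),
]


def structural_ratios(spend: float, customers: int, revenue: float) -> dict:
    """Per-period ratios; the names are illustrative, not standard terms."""
    return {
        "new_customers": customers,                   # the CMO's headline KPI
        "cost_per_customer": spend / customers,       # input per unit
        "revenue_per_customer": revenue / customers,  # output per unit
        "revenue_per_dollar": revenue / spend,        # output divided by input
    }


for q, row in enumerate(QUARTERS, start=1):
    r = structural_ratios(*row)
    print(
        f"Q{q}: customers={r['new_customers']}, "
        f"cost/customer={r['cost_per_customer']:.0f}, "
        f"revenue/customer={r['revenue_per_customer']:.0f}, "
        f"revenue/spend={r['revenue_per_dollar']:.2f}"
    )
```

With these made-up numbers, new customers rise every quarter while revenue per dollar of acquisition spend falls from 3.00 to 1.27, which is the trend the CEO sees and the separate KPIs hide.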

Interesting thoughts.

Regarding continuing the novel metaphor, my suspicion is that I have little more to wring out of it, especially now that you’ve expanded on it so interestingly. Like all models, it can lend insight, but I’ve said plenty on the limitations of models already!

I also liked your point about structural ratios; they seem like a good addition to the metrics toolkit. I have a background in finance, where ratios are used heavily for valuation, and I think other fields could learn from the way they are used there. Of course, in addition to all the typical issues with metrics, ratios have the added disadvantages of sometimes behaving unintuitively (especially when the denominator can get small) or misleadingly, such as when the mismeasurements in the two metrics are correlated.
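The small-denominator problem is easy to see numerically. This sketch assumes a fixed absolute measurement error in the denominator and uses arbitrary numbers; the helper names are hypothetical.

```python
from typing import Tuple


def ratio_bounds(numerator: float, denominator: float, error: float) -> Tuple[float, float]:
    """Lowest and highest ratio if the denominator is off by +/- error."""
    if error >= denominator:
        raise ValueError("error must be smaller than the denominator")
    return numerator / (denominator + error), numerator / (denominator - error)


def relative_swing(numerator: float, denominator: float, error: float) -> float:
    """Width of the ratio's range, relative to the ratio's nominal value."""
    low, high = ratio_bounds(numerator, denominator, error)
    return (high - low) / (numerator / denominator)


# The same +/-1 error in the denominator, at two different scales.
print(f"{relative_swing(100, 50, 1):.1%}")  # denominator 50: a few percent
print(f"{relative_swing(100, 2, 1):.1%}")   # denominator 2: over 100%
```

The same one-unit error barely moves a ratio whose denominator is 50 but more than doubles the range of one whose denominator is 2, which is why a ratio can jump around for reasons that have nothing to do with the underlying performance.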
